Over the past few months, I’ve been taking a course called Topics in Open Source Development at Seneca College. This post will be about my experience in the course.

Going into this course, I didn’t know much about it. I had read an online comment claiming that it is only useful for someone who is interested in staying heavily in open source development, and I would have to say that this wasn’t true of the course I took. Although it was a good first dive into open source programming, I found that a lot of things in the course had universal value.

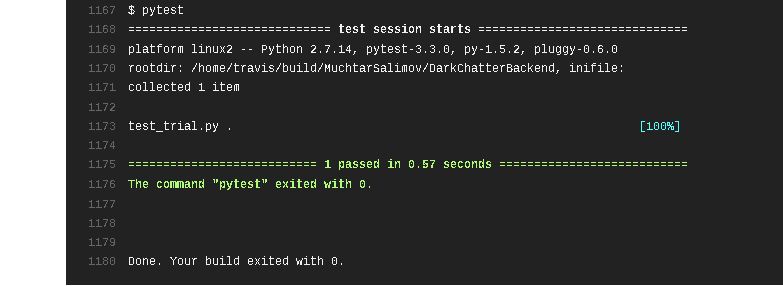

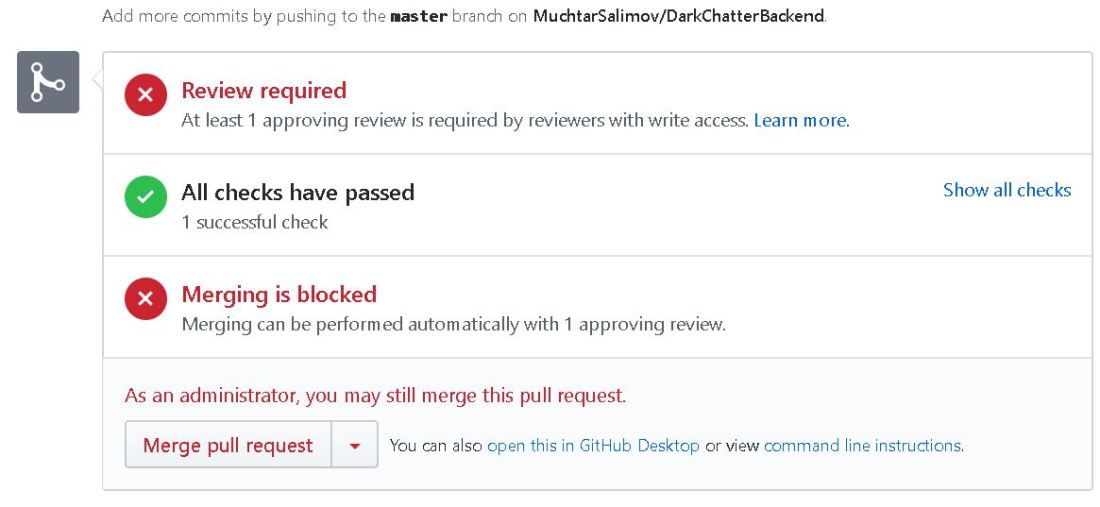

In fact, my favourite thing about the course had more to do with learning about the popular tools that facilitate collaboration on software projects: things like Git, GitHub, and continuous integration tools. Even the methods of unifying code formatting were interesting.

These are all things that are quite popular in the industry, and necessary, to varying degrees. So much so that I am shocked that I wouldn’t have learned them if I had not taken this professional option. I have already begun using Git for my other courses, and it has made my life easier. Taking all of the tools together, I feel like this has been one of the most valuable courses I have taken at Seneca.

I’m not saying that I’m an expert in any of these things yet. But the curtain has parted, and I have taken my first steps. Those, I think, are the hardest ones. I feel like I am able to jump into any of these now, and deepen my understanding without being intimidated.

As for the open source part, I am glad I had a taste of it, but I am still not sure what to make of it. On the one hand it seems like a great way to get involved in programming, especially as an outsider. Joining big projects is a good way to gain experience. Creating small projects is a great way to turn the ideas you have into reality, too. But the decision to make a project open source versus keeping it private is one that I’m not sure how to make. Not only that, but if I do end up getting a job in industry, I wonder how much time I might have to dedicate to open source work. This is something that will answer itself in time.

The toughest part of the course for me has been selecting projects. I know there are plenty of cool projects out there, but when it comes time to find them, I have no clue. Perhaps it is my lack of imagination, or my lack of awareness of what is going on in the community. For example, a lot of the hot repositories on GitHub seem to be machine-learning related, but I have yet to even dabble in that field.

In summary, I loved this course. It might not be possible to make every course in the same style. Its open-ended style would not suit subjects where everyone needs to know exactly the same thing and be accountable for performing specific tasks perfectly. But, as a learning experience, I think it has seeded my growth in a big way, and will continue to benefit me for a long time.